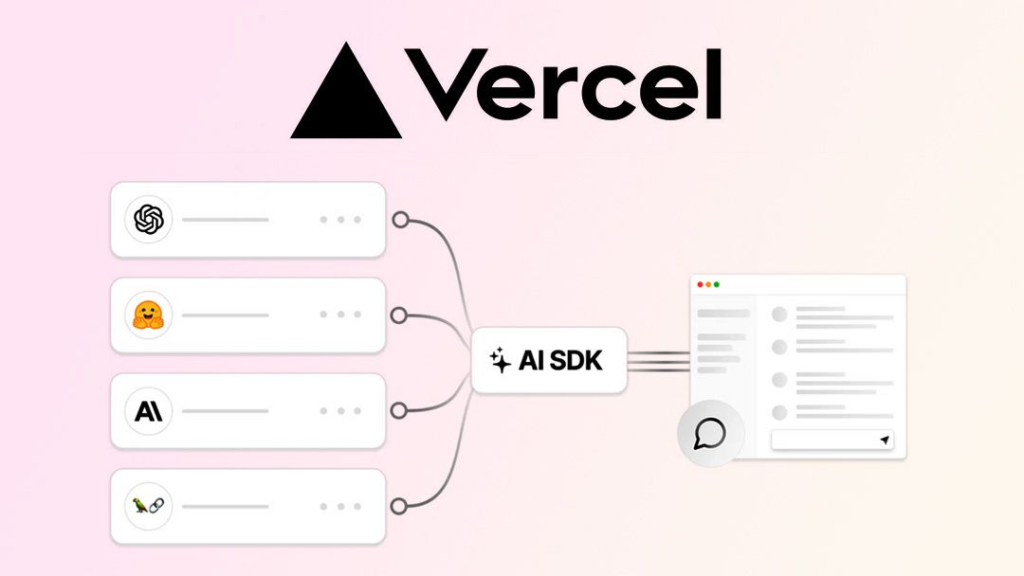

The Vercel AI SDK simplifies integrating large language models (LLMs) from providers such as OpenAI, Anthropic, and Hugging Face into Node.js applications. It gives developers a unified API for calling LLMs, handles streaming responses, and supports tools (for example, a weather lookup or a database query) that let a chatbot work with external data. Below is a step-by-step guide to using the package in Node.js.
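The idea behind a unified API is that application code calls one function and passes a `model` value, while each provider package adapts that call to its vendor's API. The dependency-free sketch below illustrates only that adapter pattern with two mock providers; `mockOpenAI`, `mockAnthropic`, `complete` and `generate` are hypothetical names and are not the SDK's actual internals.

```javascript
// Toy illustration of a provider-agnostic ("unified") API.
// All names are hypothetical; real provider packages call each vendor's HTTP API.

// Each mock provider returns a model object with the same complete() method.
function mockOpenAI(modelId) {
  return {
    provider: 'openai',
    modelId,
    async complete(prompt) {
      return `[openai:${modelId}] ${prompt}`;
    },
  };
}

function mockAnthropic(modelId) {
  return {
    provider: 'anthropic',
    modelId,
    async complete(prompt) {
      return `[anthropic:${modelId}] ${prompt}`;
    },
  };
}

// Application code depends only on this function, never on a specific provider.
async function generate({ model, prompt }) {
  const text = await model.complete(prompt);
  return { text };
}

async function main() {
  // Switching providers means changing only the model value.
  for (const model of [mockOpenAI('model-a'), mockAnthropic('model-b')]) {
    const { text } = await generate({ model, prompt: 'Hello' });
    console.log(text);
  }
}

main().catch(console.error);
```

Switching providers changes only the `model` argument, which mirrors how the SDK examples in this guide pass `openai('gpt-4-turbo')` as `model`.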

Step 1: Install the Vercel AI SDK

First, install the AI SDK using npm. This library is the core tool for interacting with various AI providers:

```bash
npm install ai
```

Next, you need to install the specific provider you want to use. For instance, to use OpenAI, install the provider package:

```bash
npm install @ai-sdk/openai
```

Step 2: Set Up Your Node.js Application
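Before writing the main script, it can help to fail fast when a package is missing or the API key is not set. By default, the OpenAI provider reads its key from the `OPENAI_API_KEY` environment variable. The sketch below is a hypothetical helper (`checkSetup` and `isResolvable` are not part of the SDK) that uses Node's `require.resolve` to confirm the packages can be found.

```javascript
// Hypothetical pre-flight check; not part of the Vercel AI SDK.
// Lists missing packages and environment variables before any API call is made.

function isResolvable(moduleName) {
  try {
    require.resolve(moduleName);
    return true;
  } catch {
    return false;
  }
}

function checkSetup({
  packages = ['ai', '@ai-sdk/openai'],
  envVars = ['OPENAI_API_KEY'],
  env = process.env,
} = {}) {
  const problems = [];
  for (const name of packages) {
    if (!isResolvable(name)) problems.push(`missing package: ${name} (run npm install ${name})`);
  }
  for (const name of envVars) {
    if (!env[name]) problems.push(`missing environment variable: ${name}`);
  }
  return problems;
}

const problems = checkSetup();
console.log(problems.length === 0 ? 'Setup looks good.' : problems.join('\n'));
```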

Create a simple index.js file to set up a Node.js application that calls an LLM. In this example, we use OpenAI's GPT-4 Turbo model for text generation (the OpenAI provider reads your API key from the `OPENAI_API_KEY` environment variable by default):

```javascript
const { generateText } = require('ai');
const { openai } = require('@ai-sdk/openai');

async function main() {
  // generateText waits for the complete response before returning.
  const { text } = await generateText({
    model: openai('gpt-4-turbo'),
    system: 'You are a friendly assistant.',
    prompt: 'Explain how the Vercel AI SDK works in Node.js.',
  });

  console.log(text);
}

main().catch(console.error);
```

Step 3: Stream Responses in Real-Time

One of the strengths of the Vercel AI SDK is its ability to handle streaming responses. This is useful for chatbots or real-time applications where you need responses as they are being generated.
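Conceptually, a text stream is an async iterable that yields chunks as they arrive, which is why the SDK's streaming example consumes `result.textStream` with `for await`. The sketch below needs no API key: it fakes a model with an async generator (`fakeTextStream` and `printStream` are hypothetical helpers, not SDK functions) to show how chunks are printed immediately and accumulated.

```javascript
// Dependency-free stand-in for a model's text stream (no API key needed).
const { setTimeout: sleep } = require('node:timers/promises');

// Hypothetical fake model: yields the reply one word at a time with a short delay.
async function* fakeTextStream(text, delayMs = 50) {
  const words = text.split(' ');
  for (let i = 0; i < words.length; i++) {
    await sleep(delayMs);
    yield (i === 0 ? '' : ' ') + words[i];
  }
}

// Print each chunk as soon as it arrives and return the accumulated text.
async function printStream(stream, write = (s) => process.stdout.write(s)) {
  let full = '';
  for await (const chunk of stream) {
    write(chunk);
    full += chunk;
  }
  return full;
}

async function main() {
  const full = await printStream(fakeTextStream('Here is a reply that arrives one word at a time.'));
  console.log(`\n(received ${full.length} characters)`);
}

main().catch(console.error);
```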

Here’s how to modify the code to stream responses from OpenAI:

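```javascript
const { streamText } = require('ai');
const { openai } = require('@ai-sdk/openai');

async function main() {
  // Older 3.x releases return a Promise here; newer releases return the result
  // directly, so `await` works with both.
  const result = await streamText({
    model: openai('gpt-4-turbo'),
    messages: [{ role: 'user', content: 'Tell me a joke' }],
  });

  // Print each text chunk as soon as it is received.
  for await (const chunk of result.textStream) {
    process.stdout.write(chunk);
  }
}

main().catch(console.error);
```

Step 4: Enhance Chatbots with Tools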

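The SDK also supports tools: functions you define (for example, a weather API or a database query) that the model can ask to call, with the results fed back into its response. The example below describes the tool's parameters with Zod, so install it as well (`npm install zod`). Here's an example of adding a weather tool: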

```javascript
const { streamText, tool } = require('ai');
const { openai } = require('@ai-sdk/openai');
const { z } = require('zod');

const weatherTool = tool({
  description: 'Fetch current weather in a city',
  // Zod schema describing the arguments the model must supply.
  parameters: z.object({
    city: z.string().describe('Name of the city'),
  }),
  execute: async ({ city }) => {
    // Mock weather data (replace this with an actual API call)
    const temperature = Math.random() * 35;
    return { city, temperature: temperature.toFixed(1) };
  },
});

async function main() {
  const result = await streamText({
    model: openai('gpt-4-turbo'),
    messages: [{ role: 'user', content: 'What’s the weather in New York?' }],
    tools: { weather: weatherTool },
    // Allow a second step so the model can turn the tool result into a text answer
    // (option name as in AI SDK 4.x).
    maxSteps: 2,
  });

  for await (const chunk of result.textStream) {
    process.stdout.write(chunk);
  }
}

main().catch(console.error);
```

In this example, the model can generate a tool call to fetch weather data when the user asks for it. The SDK runs the tool's `execute` function and, because `maxSteps` allows a second step, sends the result back to the model so it can write the final answer. This allows your chatbot to answer questions that depend on external data.
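Conceptually, tool use is a loop: the model either answers with text or requests a tool call; the tool's result is added to the conversation, and the model is called again to produce the answer. The dependency-free sketch below mimics that loop with a scripted mock model. `mockModel`, `runWithTools` and the message shapes are hypothetical and much simpler than the SDK's, which also validates the model's arguments against the Zod schema.

```javascript
// Hypothetical sketch of a tool-calling loop with a scripted mock model (no SDK, no network).

const tools = {
  weather: {
    description: 'Fetch current weather in a city',
    // Fixed mock result so the output is predictable; replace with a real API call.
    execute: async ({ city }) => ({ city, temperature: '21.0' }),
  },
};

// Mock model: requests the weather tool first, then answers using the tool result.
async function mockModel(messages) {
  const toolMessage = messages.find((m) => m.role === 'tool');
  if (!toolMessage) {
    return { toolCall: { name: 'weather', args: { city: 'New York' } } };
  }
  const { city, temperature } = toolMessage.result;
  return { text: `It is currently ${temperature}°C in ${city}.` };
}

async function runWithTools(model, messages, tools, maxSteps = 2) {
  const history = [...messages];
  for (let step = 0; step < maxSteps; step++) {
    const response = await model(history);
    if (!response.toolCall) return response.text; // the model answered with text
    const { name, args } = response.toolCall;
    const tool = tools[name];
    if (!tool) throw new Error(`Unknown tool: ${name}`);
    const result = await tool.execute(args);
    // Feed the tool result back so the next step can use it.
    history.push({ role: 'assistant', toolCall: response.toolCall });
    history.push({ role: 'tool', name, result });
  }
  return undefined; // step limit reached before a text answer
}

runWithTools(mockModel, [{ role: 'user', content: 'What is the weather in New York?' }], tools)
  .then((text) => console.log(text))
  .catch(console.error);
```

With `maxSteps = 1` the loop stops right after the tool call and returns no text, which is why, in AI SDK 4.x, `streamText` needs `maxSteps` above 1 before the model can turn a tool result into a written answer.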

Step 5: Deploy on Edge or Serverless

The Vercel AI SDK is designed to be edge-ready: besides plain Node.js, it runs in Vercel's Edge and Serverless Functions, so you can deploy your AI-powered application to Vercel and let the platform handle scaling.
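Edge runtimes are built on Web-standard `Request`, `Response` and `ReadableStream` objects rather than Node's `http` module. The sketch below shows a streaming handler in that style; it runs on Node 18+, which provides these globals. The `handler` name, the `{ prompt }` request body and the `fakeModelStream` mock are assumptions for illustration: how a handler is exported and routed depends on your framework and deployment target, and a real app would stream from the SDK instead of the mock.

```javascript
// Web-standard streaming handler (Request in, Response out), runnable on Node 18+.
// fakeModelStream is a hypothetical stand-in for a model's text stream.

async function* fakeModelStream(prompt) {
  yield 'You said: ';
  yield prompt;
}

async function handler(request) {
  const { prompt } = await request.json();
  const encoder = new TextEncoder();
  const iterator = fakeModelStream(prompt);

  // Pull one chunk from the model stream each time the client is ready for more.
  const body = new ReadableStream({
    async pull(controller) {
      const { value, done } = await iterator.next();
      if (done) controller.close();
      else controller.enqueue(encoder.encode(value));
    },
  });

  return new Response(body, {
    headers: { 'Content-Type': 'text/plain; charset=utf-8' },
  });
}

// Local usage example: build a Request by hand and read the streamed body.
async function main() {
  const request = new Request('http://localhost/api/chat', {
    method: 'POST',
    body: JSON.stringify({ prompt: 'hello' }),
  });
  const response = await handler(request);
  console.log(await response.text());
}

main().catch(console.error);
```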

You can also run your own server and expose the AI function through an HTTP endpoint. To learn how to create a server in Node.js, read the next post.
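If you run your own server, Node's built-in `http` module is enough to expose the AI function over HTTP. The sketch below streams chunks to the client as they are produced. The `/chat` route, the `prompt` query parameter and the `fakeModelStream` mock are assumptions for illustration; in a real app you would iterate over the SDK's `result.textStream` inside the request handler instead of the mock.

```javascript
// Minimal streaming HTTP server using only Node's built-in http module.
// The /chat route and fakeModelStream are hypothetical; in a real app, iterate over
// the SDK's result.textStream instead of the mock.
const http = require('node:http');

async function* fakeModelStream(prompt) {
  yield 'Echo: ';
  yield prompt;
}

function createChatServer() {
  return http.createServer(async (req, res) => {
    const url = new URL(req.url, 'http://localhost');
    if (req.method !== 'GET' || url.pathname !== '/chat') {
      res.writeHead(404, { 'Content-Type': 'text/plain' }).end('Not found');
      return;
    }
    const prompt = url.searchParams.get('prompt') ?? '';
    res.writeHead(200, { 'Content-Type': 'text/plain; charset=utf-8' });
    // Send each chunk to the client as soon as it is produced.
    for await (const chunk of fakeModelStream(prompt)) {
      res.write(chunk);
    }
    res.end();
  });
}

// Small client helper: GET a URL and resolve with the full response body.
function fetchText(url) {
  return new Promise((resolve, reject) => {
    http
      .get(url, { agent: false }, (res) => {
        let body = '';
        res.setEncoding('utf8');
        res.on('data', (chunk) => (body += chunk));
        res.on('end', () => resolve(body));
      })
      .on('error', reject);
  });
}

// Usage example: listen on a free port, send one request, print the reply, shut down.
async function main() {
  const server = createChatServer();
  await new Promise((resolve) => server.listen(0, resolve));
  const { port } = server.address();
  console.log(await fetchText(`http://127.0.0.1:${port}/chat?prompt=hello`));
  server.close();
}

main().catch(console.error);
```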

Conclusion

By integrating the Vercel AI SDK into your Node.js applications, you can seamlessly incorporate LLMs with real-time response streaming, multi-model support, and tool usage for enhanced functionalities like API interactions. Whether you are building a chatbot or a generative application, this SDK simplifies the process while offering extensive flexibility.

For more information, explore the official Vercel AI SDK documentation.